AI Leaders Are Replacing Regulatory Guesswork with Stable, Agile Internal Operating Models

Key Points

Global AI regulation keeps shifting and conflicting, leaving leaders stuck between what they can responsibly build and constantly changing rules that create internal confusion and burnout.

Olivia Gambelin, Founder of Ethical Intelligence, reframes responsible AI as a change management challenge rooted in people, processes, and ownership, not law.

Her solution centers on building stable internal frameworks with clear ownership, embedded risk checks, and team-level accountability, so regulation becomes a layer to add, not a system to rebuild.

AI’s biggest source of chaos right now is the global regulatory mess surrounding it. As laws shift, stall, and contradict one another, many organizations find themselves stuck between what they can responsibly build and what they’re allowed to deploy. Leaders who wait for regulatory certainty end up pulling their teams in different directions each time the rules change, while those who set a clear internal framework early give their organizations the stability and confidence to move forward anyway.

Olivia Gambelin is a world-renowned AI ethicist, strategic advisor, and award-winning author of Responsible AI: Implement an Ethical Approach in Your Organization. As the Founder of Ethical Intelligence, she draws on over a decade of experience advising Fortune 500 leadership on the adoption and development of AI. A prominent TEDx speaker, she offers simple advice for navigating the current moment: stop chasing regulations and start building.

“If you have clear internal principles and processes, compliance becomes something you layer on, not something that forces you to rebuild how your teams operate,” says Gambelin. Her philosophy is born from an unstable global regulatory environment.

The EU’s landmark AI Act, once seen as the gold standard, is now sowing confusion as regulators signal they are caving under pressure to scale it back. The reversal leaves many companies asking themselves why they should aim for a benchmark that keeps moving. Meanwhile, in the US, a federal executive order threatens to pull funding from states with AI laws it deems too onerous, adding to the chaos.

Cultural readiness: To break the pattern, Gambelin advises leaders to turn their focus toward building a stable internal foundation. She reframes responsible AI as a change management issue: not a legal problem but a human one. Her approach acknowledges the organizational impact of AI, positioning cultural readiness as a key determinant of success. “Responsible AI isn’t about predicting the next regulation,” she states. “It’s about giving people one clear, stable way to work when everything around them keeps changing.”

Build for agility: The point isn’t to chase every regulatory shift as it happens, says Gambelin, but to design systems and processes that can absorb change without forcing teams to constantly reset how they work. “Instead of having to change your infrastructure and your operations every single time a regulator decides to do something different, you’re built to actually hit compliance on any of these new regulations.”

The alternative is a leadership vacuum where internal politics thrive. When efforts are fragmented and ownership is unclear, teams can become exhausted by constantly changing expectations and internal friction, ultimately stalling adoption. Gambelin warns that this creates a political battleground where consolidating resources can feel like a power grab. “If everyone’s responsible, no one’s responsible,” she says.

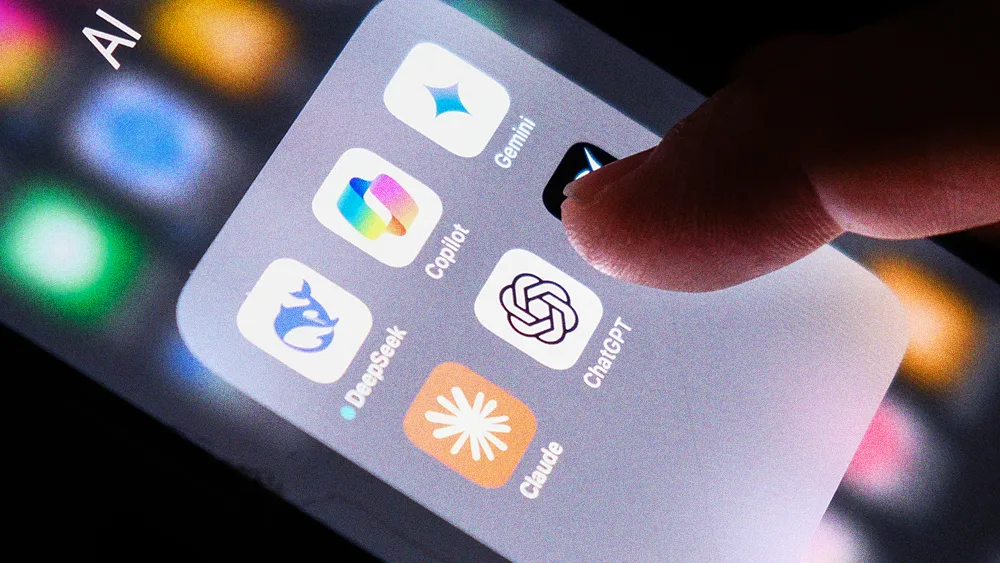

Buyer beware: Gambelin grounds the idea of infrastructure in a process every organization already relies on: procurement. With the market flooded by AI washing, she points to vendor evaluation as a practical place to embed governance without disrupting day-to-day work. “Many of these vendors make claims they cannot do that are outside the scope of AI possibility, so you actually have to check,” she says. By making that check a standard, mandatory step in procurement, companies build a governance muscle that can tighten or loosen as regulations change, without forcing the entire organization back into retraining mode.

Accountable ownership: Rather than trying to turn every employee into an ethicist, Gambelin focuses on simple, repeatable workflows that connect teams to expertise at the moments that matter. That structure is reinforced by setting clear AI principles at the company level, then asking individual teams to define what those principles mean in their own day-to-day work. “That kind of training is a collaborative, bottom-up approach where it doesn’t feel like these principles are being imposed,” she says. “It’s more of accountable ownership, and that really helps them stick.”

For leaders navigating the uncertainty, Gambelin’s final advice reframes the conversation from a question of ethics to one of project viability. She warns that the hype-driven strategy of throwing technology at undefined problems often fails. The high failure rate of AI initiatives builds a powerful business case for creating a responsible foundation first. Gambelin asserts that a responsible AI framework provides a clear return on investment.

“80 to 90 percent of all AI initiatives fail. Point blank, fail. That is a tremendous amount of money and a tremendous amount of resources and time going to something that is most likely going to fail,” Gambelin concludes. “It’s been proven that responsible AI practices reduce that risk by at least 30%. When you look at the numbers, there’s no denying it.”
