In the absence of human agents, digital assistants can become barriers instead of bridges

Key Points

Intel’s Senior HR Data Measurement Analyst Zsolt Olah discusses how chatbots often fail to meet consumer expectations.

Many users experience frustration with chatbots due to a mismatch between their perceived intelligence and actual functionality.

Businesses face increased customer dissatisfaction when chatbots fail to replace human support effectively.

From the customer's perspective, I would summarize the problem in one word: affordance. It comes down to the perceived versus the actual use of these chatbots.

Zsolt Olah

Senior HR Data Measurement Analyst

Intel Corporation

We’ve all been there: stuck in a digital back-and-forth with a customer service chatbot that seems designed to misunderstand. A clear request is met with irrelevant options, and the bot acts less like an assistant and more like an active barrier to the help you need. It’s a near-universal frustration, and it points to a failure of both design and expectation.

The problem, according to Zsolt Olah, a Senior HR Data Measurement Analyst at Intel Corporation, isn’t just the limitations of the bots; it’s the gap between their ability and our perception of their ability. With a background that allows him to “build the bridge between the language of business, the language of data, and the language of technology,” Olah argues that to understand why your chatbot falls short, you first have to understand the trap of its interface.

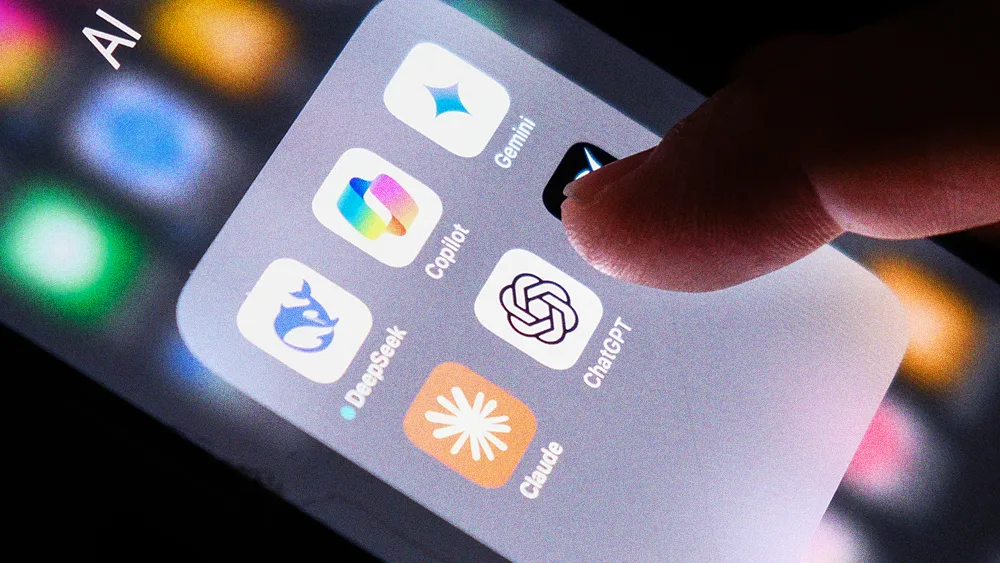

The affordance trap: “From the customer’s perspective, I would summarize the problem in one word: affordance,” he says. “It comes down to the perceived versus the actual use of these chatbots.” The simple, open-ended chat window affords the impression of intelligence, creating a massive gap between what users expect and what the system can deliver, especially in a world that has seen what tools like ChatGPT are capable of.

Lack of understanding: “The problem with chatbots today is that they use the same interface no matter what data is behind them. Our expectation is that we’re dealing with some sort of intelligent thing. I assume that they at least understand what my problem is, and if they cannot help me, that they’ll at least tell me that. But they often don’t.”

The problem is compounded by the business strategy that deployed the bot in the first place. “Companies are reducing human resources for call centers because they think a chatbot can provide support,” Olah explains.

Humans wanted: “But if that bot doesn’t pick up the slack, you have double the problems: you get a frustrated customer, and then they have to wait a long time to talk to a human because you don’t have enough of them.”

Unnecessary anthropomorphizing: To make matters worse, some designs actively erode trust through small deceptions. “You see the fake ‘agent is typing…’ notification. They’re not typing,” Olah says. “The agents are talking to multiple people at once, and they want to make sure you stay connected while they talk to someone else.” It’s a small detail that signals a larger disrespect for the customer’s intelligence and time.

Olah says that behind that sleek interface often lies a frustratingly simple system. “Most bots in the absence of humans basically function like a glorified FAQ.” The result is a predictable, learned behavior. “Users end up repeatedly typing in ‘agent’ in an attempt to speak to a real person, just like we did on the phone when we hammered the zero because we didn’t want to talk to the IVR,” he says.

Convenience over trust: Looking ahead, Olah predicts the next evolution isn’t just better conversation, but a shift toward AI agents that can take direct action, like fixing a billing error. “The next phase isn’t just conversation, it’s action. Your chatbot will become an agent that can act on your behalf,” he says. “And that presents a fascinating question: how long will it take for you to be convinced by convenience over trust?” For Olah, the answer depends entirely on the stakes. “If it’s simple, like just fix something on my website, I don’t care—just do it,” he concludes. “But if it’s about healthcare or a critical issue with real consequences, I’d rather talk to a human to make sure they understand what they’re doing.”

Once AI agents are able to take action for you in a way that's convenient, are you going to trust them to do that? Or are you going to wait in line for two hours to speak to an agent?

Zsolt Olah

Senior HR Data Measurement Analyst

Intel Corporation
