How leaders are redefining org charts around three ‘Cruxes of Trust’ for agentic AI

Key Points

Tatyana Mamut, PhD, Co-Founder and CEO of agentic AI startup Wayfound, explains why legal and organizational accountability remains firmly with humans, even in the age of agentic AI.

She contrasts the hype around a networked ‘Orchestration Graph’ of humans and agents with the practical need for traditional hierarchies.

Rather than eliminating the organizational chart, Mamut says, AI is instead redefining it.

As AI handles more mechanical tasks, the most valuable human skills are judgment, taste, and relationship-building, the three cruxes of trust.

Will agent orchestration erase the org chart? The answer is 'no.' Humans are still legally accountable and responsible. You still need a human to point the finger at when something goes wrong.

Tatyana Mamut, PhD

Co-Founder and CEO

Wayfound

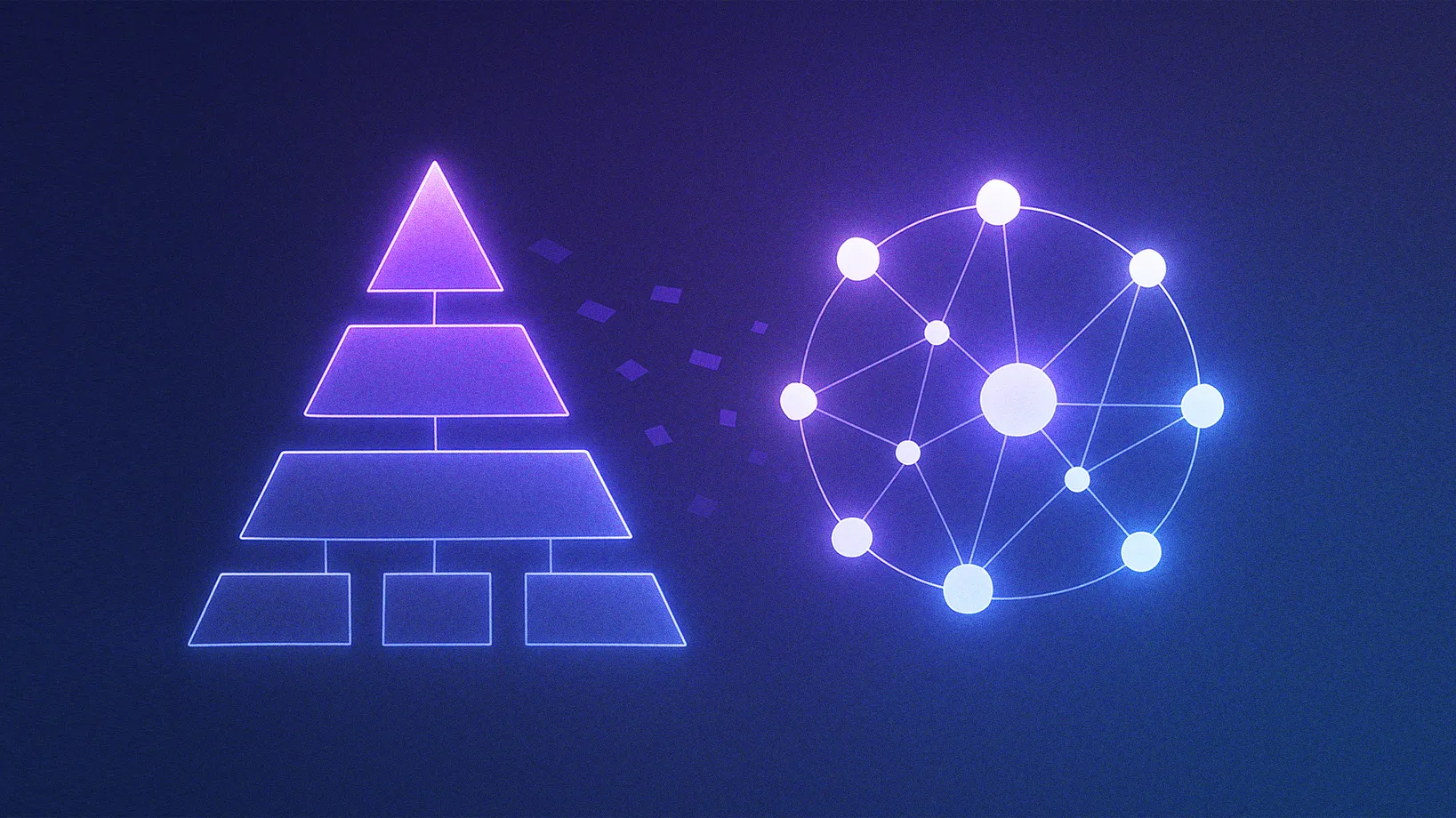

Yet another new theory is gaining traction in Silicon Valley. ‘The Orchestration Graph’ suggests that companies will become fluid networks of AI agents and humans, eventually dissolving traditional hierarchies altogether. But hiding behind the promise of unprecedented efficiency is a simple yet often-ignored truth: when things go wrong, companies are the ones held responsible, not AI. A recent lawsuit against Air Canada serves as an excellent example of the gray area around AI liability that has some experts concerned.

To learn more, we spoke with Tatyana Mamut, PhD, Co-Founder and CEO of AI guardrail company Wayfound. As a former executive at AWS and Salesforce, Mamut has seen plenty of organizational design fads come and go. The current hype around agent-led organizations, she cautioned, is just the latest attempt to rebrand yet another pipe dream.

The holacracy myth: The idea of a flat, AI-driven organization is inherently flawed, Mamut said. And for some seasoned executives, it might even feel dangerously familiar. As with the holacracy management trend circa 2016, she explained, widespread attempts to introduce decentralized structures tend to fizzle out in favor of traditional hierarchies. In Mamut’s view, the fundamental principles of business and law remain unchanged by AI. “Will agent orchestration erase the org chart? The answer is ‘no.’ Humans are still legally accountable and responsible. You still need a human to point the finger at when something goes wrong.”

The logic of business and law: The principle of human accountability is already being confirmed in court, Mamut said. While proponents of agent-led systems imagine a decentralized network making decisions, recent lawsuits suggest that blaming the agent may not hold up as a defense. “The courts just aren’t going to have any of it. The agents are not legally liable. You are legally liable. The humans are legally liable.”

Rather than eliminating the org chart, Mamut explained, AI must redraw it. Even the rise of agents doesn’t eliminate the need for structure, she said. But it will change where the work gets done and where the bottlenecks form.

Reconfiguring the org chart: A fully flat, agent-led organization is like an orchestra with no conductor, she said. “The reason organizations exist is to align actors, human or otherwise, around goals and priorities. This necessitates hierarchy.” The challenge for leaders is in redesigning their organizations around this new operational reality. “The roadblock has moved from doing the work to approving the work,” Mamut said. “The question of who has the judgment to approve the work of AI agents is a real barrier to adoption.”

The type of shift Mamut described would consolidate previously separate functions like sales, marketing, product, and engineering under a single leader. But this reconfigured hierarchy also creates a paradox: If humans are ultimately accountable, how can they manage millions of agent interactions happening at speed and scale?

The supervisor solution: The solution is supervision, Mamut said, not orchestration. In her proposed model, a ‘guardian agent’ functions like a chief of staff. Here, she explained, “AI agents supervise the work while humans think strategically. But, because people are accountable, they need a supervisor who’s actually monitoring whether the agents are doing what the employee actually wants.”

The concept, also identified by firms like Gartner, represents a critical missing piece in the enterprise AI stack. As AI automates more mechanical tasks, Mamut concluded, leaders must invest in the uniquely human skills that cannot be replicated. “Judgment, taste, relationships, these are the things that are not going away. These are the crux points of trust.”

In closing, she shared a story about an engineering team that could build anything the business leader asked for, but always asked one question: What does ‘good’ look like? “They’d say, ‘You need to tell me what good looks like so I can build an agent that actually accomplishes that outcome.’ The business workflow or the workflow process by itself just wasn’t enough. Humans actually make millions of little micro-decision judgments, tastes, and relationships. That nuance is something AI agents currently can’t replace.”

The reason organizations exist is to align actors, human or otherwise, around goals and priorities. This necessitates hierarchy.

Tatyana Mamut, PhD

Co-Founder and CEO

Wayfound
