EY Talent Leader on Why Companies Can’t Build AI Skills Inside Outdated Systems

Key Points

Darryl Wright, Ernst & Young’s National Lead for Leadership, Talent, and Future of Work, explains why AI adoption depends less on shifting mindsets than on changing the systems people operate in.

His approach starts with leadership, creating an immersive and psychologically safe program for executives to learn about AI long before any company-wide rollout.

Wright recommends sparking bottom-up momentum by making learning personal and social, incentivizing employees to experiment and share their findings.

He stresses the need to proactively manage the workforce by understanding who needs upskilling versus reskilling.

You can’t train people into new habits inside old systems. Before you ask employees to think differently, you have to rebuild the environment that shapes how they work. That means creating safe spaces for leaders to learn, demystifying the technology, and building confidence long before a single use case hits the floor.

Darryl Wright

National Lead for Leadership, Talent, and Future of Work

Ernst & Young

Most companies are getting AI adoption backward. They spend months trying to shift employee mindsets while the systems around them stay rooted in the past. Transformation stalls because behavior follows structure, not slogans, and the outcome is a workforce fluent in AI buzzwords but left without the tools to turn theory into action.

Darryl Wright has spent his career watching how big ideas collide with old systems. As a partner at Ernst & Young and the firm’s National Lead for Leadership, Talent, and Future of Work, he’s seen how even the boldest transformation efforts stall when structure lags behind ambition. Drawing on decades of experience at Scotiabank and Duke Corporate Education, Wright has built his philosophy around one core principle.

“You can’t train people into new habits inside old systems. Before you ask employees to think differently, you have to rebuild the environment that shapes how they work. That means creating safe spaces for leaders to learn, demystifying the technology, and building confidence long before a single use case hits the floor. Real transformation starts with the system, not the slogans,” says Wright.

Don’t blame the player: Wright argues that true change has less to do with attitude and more to do with architecture. “You can’t change how people think in a workshop and then send them back into structures that reward the old way of working,” he explains. “You have to redesign the system that their new mindset will live in.”

To counter the fear and resistance that can hinder progress, Wright’s approach begins at the top. He advocates creating a psychologically safe space for leaders to learn long before any company-wide rollout. By building a clear, well-governed framework first, he aims to take the mystery out of AI and replace anxiety with confidence.

Learn before you leap: “We designed an immersive three-day program that gave executives a safe space to learn and ask questions. Day one was about the art of the possible, showing how others were already using AI to solve real problems. Day two was deep knowledge building, breaking down how the technology actually works. Day three focused on culture and collaboration. We didn’t talk about use cases at all—it was entirely about the environment these leaders would need to create,” Wright explains.

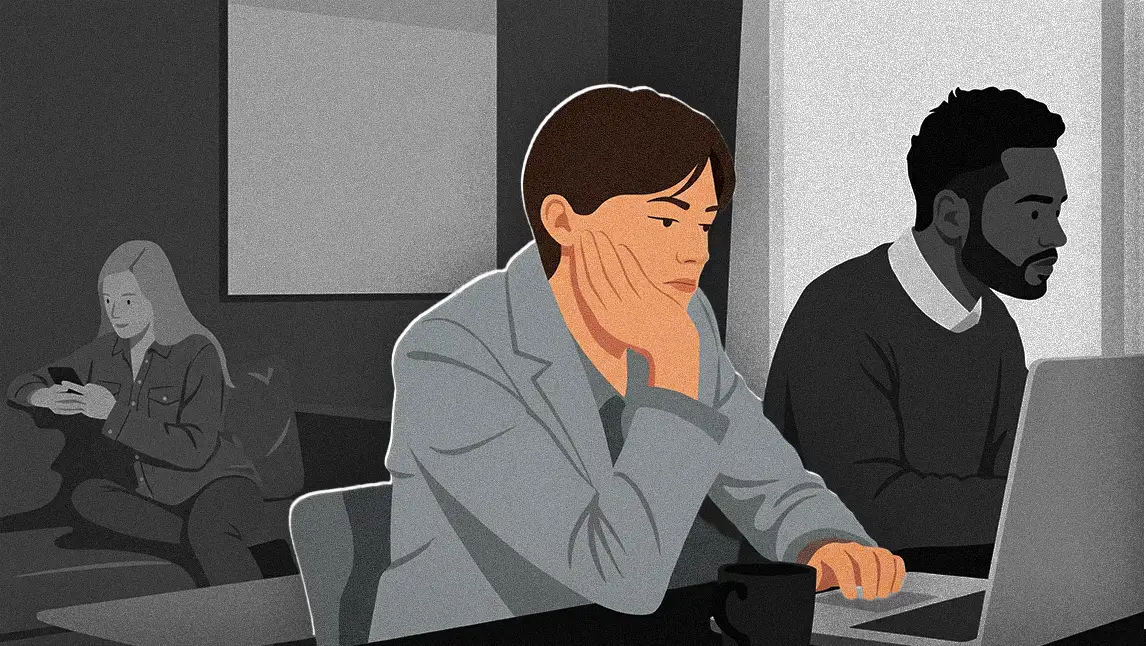

Once leadership is aligned, the focus turns to translating that top-down strategy into bottom-up engagement. The key, Wright says, is to make learning personal and social, building momentum that outlasts any training session.

A spoonful of fun: “If you allow people to play and experiment, and you reward and incentivize them to do that, then the fears go away. If you share your prompts in the prompt library for your specific role, then people get excited to knowledge share and try things out. The conversation gains its own momentum.”

When good tools meet old fears: Wright shares an example of a promising AI pilot that never gained traction because employees feared the tool might replace their roles. “It’s a textbook case of what happens when you don’t create psychological safety or a clear path for upskilling,” he says.

Get the system right, and you unlock a far more sophisticated workforce strategy. For Wright, the primary challenge then becomes proactively managing a massive talent transition. To do so, leaders need a granular understanding of their workforce, distinguishing roles that need minor upskilling from those that require complete reskilling.

The talent census: “You’ve got to be really clear on understanding your workforce and capability requirements. You’ve got to know what your workforce looks like by role and by individual,” Wright explains.

While Wright is an “AI optimist,” his vision is grounded in a pragmatic understanding of the technology’s limits. His guiding principle is built around a simple metaphor: AI is a copilot, not an autopilot. It augments capability, but human judgment remains non-negotiable. He practices this daily at his own firm, which he notes has a “massive oversight” process.

“Using AI is like doing research; you just do it faster,” he explains. “But you’ve still got to go in and look at it, review it, and interrogate the data.”


